If you start a career in data engineering in 2025, you will be entering a rapidly evolving field. Thanks to AI, data pipelines, orchestration, and even coding are changing rapidly. But foundational skills still matter. This guide will help you bridge that gap, from traditional best practices to the AI-driven future.

I wrote about the core responsibilities of data engineers back in 2022. Now, with the landscape rapidly changing, it feels like the right time to share an updated perspective. This guide provides practical advice, key skills to concentrate on, and a glimpse of what is next, whether you are a recent graduate or transitioning to data engineering.

The Transformation of Data Engineering by Artificial Intelligence

What is changing data engineering so quickly? The answer is simple: AI.

You might think AI is the responsibility of your downstream users. In that view, data engineering plays a supporting role for modeling, training, and analytics, and a data engineer's daily tasks do not involve AI.

That is changing right now. With LLMs and MCP (Model Context Protocol), writing data engineering code is no longer a unique skill. You can feed your most recent schema, documentation, and requirements to an LLM and let it use the MCP toolbox to resolve the complexity behind the scenes.

I wrote more about this process in another article: The AI Wake-Up Call for Data Engineers: Why LLMs + MCP Matter Now

AI becomes an extension of your team, assisting you with development, debugging, and brainstorming. The integration of AI into data engineering is an unavoidable trend.

The Skills That Still Matter (SQL, Python, Cloud)

If we could accomplish everything with AI, would we still need SQL or Python knowledge?

Yes, they are still mandatory skills.

AI can help you code, but it is not always correct. You can have AI write a complex project, but how do you know what it does and what data sources it uses? Is it privacy compliant? Does it meet your requirements? It is still up to you, the data engineer reading this article, to decide.

AI can help you with some of your coding and writing unit tests. The fun fact is we are now reviewing more code that was not written by a human.
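One way to review code you did not write is to pin its behavior down with small tests. The sketch below is only an illustration: `latest_per_user` is a hypothetical helper standing in for AI-generated code, and the event fields (`user_id`, `ts`, `plan`) are made up for the example.

```python
import unittest


def latest_per_user(events):
    """Hypothetical AI-generated helper: keep the newest event per user_id."""
    latest = {}
    for event in events:
        current = latest.get(event["user_id"])
        # ISO-8601 date strings compare correctly as plain strings.
        if current is None or event["ts"] > current["ts"]:
            latest[event["user_id"]] = event
    return sorted(latest.values(), key=lambda e: e["user_id"])


class LatestPerUserTest(unittest.TestCase):
    def test_keeps_newest_event(self):
        events = [
            {"user_id": 1, "ts": "2025-01-01", "plan": "free"},
            {"user_id": 1, "ts": "2025-02-01", "plan": "pro"},
            {"user_id": 2, "ts": "2025-01-15", "plan": "free"},
        ]
        result = latest_per_user(events)
        self.assertEqual([e["plan"] for e in result], ["pro", "free"])

    def test_empty_input(self):
        self.assertEqual(latest_per_user([]), [])
```

Saved to a file, these tests can be run with `python -m unittest`. The point is less the helper itself than the habit: you decide what "correct" means, then check the generated code against it.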

Begin by establishing a solid foundation in SQL for data manipulation, focusing on fundamentals such as SELECT statements and joins, window functions, and query optimization.
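To make those fundamentals concrete, here is a minimal sketch that combines a join with a window function. It runs SQL through Python's built-in `sqlite3` module so it needs no setup; the `customers` and `orders` tables and their rows are invented for illustration, and it assumes your Python ships SQLite 3.25 or later (needed for window functions).

```python
import sqlite3

# Hypothetical tables and rows, made up for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER,
        amount REAL,
        ordered_at TEXT
    );
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO orders VALUES
        (1, 1, 20.0, '2025-01-01'),
        (2, 1, 35.0, '2025-01-03'),
        (3, 2, 50.0, '2025-01-02');
""")

# Join orders to customers, then compute a running total per customer.
query = """
    SELECT c.name,
           o.ordered_at,
           o.amount,
           SUM(o.amount) OVER (
               PARTITION BY c.id ORDER BY o.ordered_at
           ) AS running_total
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    ORDER BY c.name, o.ordered_at
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

The same query shape carries over to most warehouses, though syntax details and performance characteristics differ between engines.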

Python is still the most essential language for scripting and data processing (via libraries such as Pandas and PySpark). Also consider Java or Scala for Spark and Flink.

Learn by Building: Projects That Teach You More

While taking an online data engineering course will provide you with the fundamentals of the field, you will learn far more by working on projects.

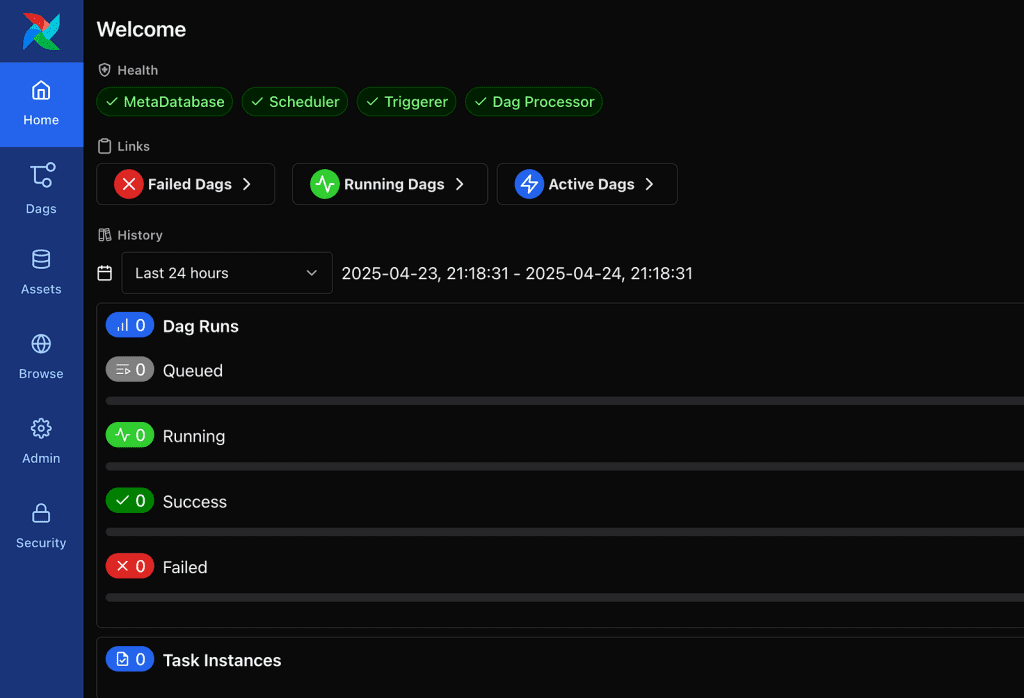

Knowing theory is not enough; real-world experience with tools like Apache Airflow for orchestration, dbt for data transformations, and Spark or Flink for large-scale processing can make a big difference.

These projects demonstrate your understanding of the entire data lifecycle while also providing you with something tangible to showcase in interviews or your portfolio. Furthermore, every project provides an opportunity to learn how modern data stacks behave in the wild, which no tutorial can fully teach.

By creating an actual web crawler and scheduling it in Airflow, you will learn far more than you would by reading the documentation. In the real world, your Airflow DAGs won’t always stay green — and that’s where the real learning begins. We build a project assuming everything works flawlessly, but in the real world, you must deal with failures, backfill, data loss, alerting, error debugging, SLA misses, and so on.
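Two of those failure modes, retries and backfills, can be sketched in plain Python. This is not Airflow's API (Airflow handles retries through task settings such as `retries` and `retry_delay`, and has its own backfill mechanism); it is a simplified illustration of the underlying ideas. The function names and the flaky crawler are hypothetical.

```python
import time
from datetime import date, timedelta


def run_with_retries(task, run_date, retries=3, delay_seconds=0.0):
    """Call task(run_date), retrying on any exception up to `retries` extra times."""
    for attempt in range(retries + 1):
        try:
            return task(run_date)
        except Exception as exc:
            if attempt == retries:
                raise  # out of retries: surface the failure so it can be alerted on
            print(f"{run_date}: attempt {attempt + 1} failed ({exc}); retrying")
            time.sleep(delay_seconds)


def backfill(task, start, end, completed):
    """Run task for each day in [start, end], skipping days already completed."""
    day = start
    while day <= end:
        if day not in completed:
            run_with_retries(task, day)
            completed.add(day)
        day += timedelta(days=1)
    return completed


# Hypothetical flaky crawl: fails the first time it sees each date.
seen = set()


def flaky_crawl(run_date):
    if run_date not in seen:
        seen.add(run_date)
        raise ConnectionError("simulated timeout")
    return f"crawled {run_date}"


done = backfill(
    flaky_crawl,
    start=date(2025, 1, 1),
    end=date(2025, 1, 3),
    completed={date(2025, 1, 2)},  # pretend this day already succeeded
)
print(sorted(done))
```

Tracking completed dates is what makes the backfill safe to rerun: a second pass only touches days that are still missing.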

Cloud Fluency Sets You Apart

Unless you’re attempting a hackathon that involves stacking multiple Raspberry Pis as servers, you will almost certainly work in a cloud environment.

Whether it is AWS, GCP, or Azure, each platform provides different services, but the fundamentals are the same: you must understand how to store data efficiently, process it at scale, and orchestrate workflows using managed services.

Getting hands-on experience with these tools — spinning up pipelines, managing permissions, and monitoring jobs — allows you to move beyond theory.

If you are concerned about unexpectedly high bills, you can try applying for AWS startup credits to cover a personal project. The ability to navigate cloud consoles, manage costs, and understand how services integrate increasingly distinguishes junior engineers from those prepared to work on real-world systems.

The first edition of Ralph Kimball's The Data Warehouse Toolkit introduced the industry to dimensional modeling, and now his books are considered the most authoritative guides in this space. This new third edition is a complete library of updated dimensional modeling techniques, the most comprehensive collection ever. It covers new and enhanced star schema dimensional modeling patterns, adds two new chapters on ETL techniques, includes new and expanded business matrices for 12 case studies, and more.

- Authored by Ralph Kimball and Margy Ross, known worldwide as educators, consultants, and influential thought leaders in data warehousing and business intelligence

- Begins with fundamental design recommendations and progresses through increasingly complex scenarios

- Presents unique modeling techniques for business applications such as inventory management, procurement, invoicing, accounting, customer relationship management, big data analytics, and more

- Draws real-world case studies from a variety of industries, including retail sales, financial services, telecommunications, education, health care, insurance, e-commerce, and more

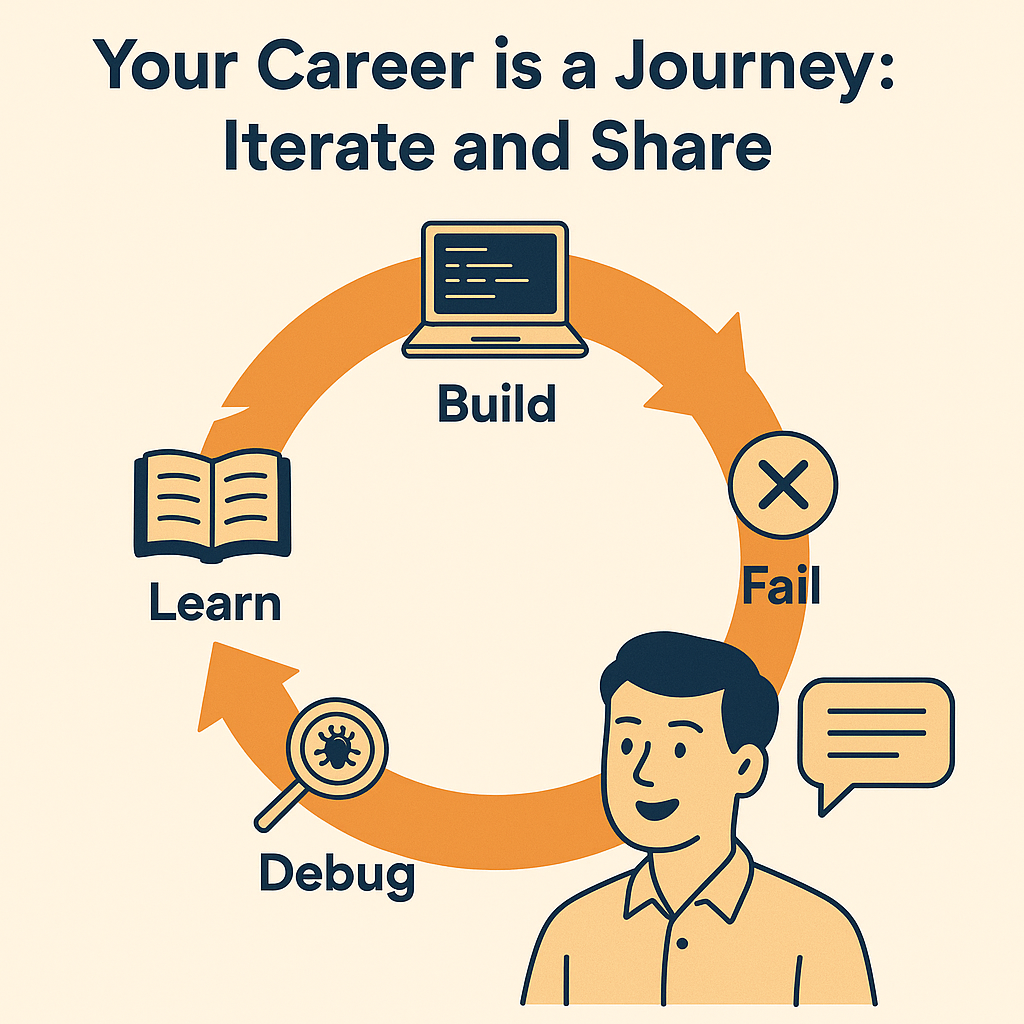

Your Career is a Journey: Iterate and Share

Continuous learning is the most valuable skill that a data engineer can acquire during the initial stages of their career. Frameworks, tools, and best practices are evolving rapidly, so it is important to cultivate curiosity and keep strengthening your knowledge.

Follow blogs from the teams that create the tools you use, such as dbt Labs, Astronomer (Airflow), and Databricks (Spark).

Do not simply consume; share what you are learning. Write about your experiences, publish the code you wrote in a GitHub repository, or even give a talk at a local meetup and network with people.

The journey into data engineering is characterized by a series of iterations where you learn, adapt, and grow. The tools will change. The buzzwords will shift. But your ability to think critically, build real systems, and stay curious will always set you apart.

So build. Break things. Debug them. Share your process. Whether you’re using AI to write tests, fix Airflow DAGs, or optimize an SQL query for the hundredth time—you’re doing the work that matters. Welcome to the field. Let’s see what you build next.

Final Thoughts

In 2025, data engineering is not only about moving data from one location to another; it is also about understanding the broader context. You are not merely constructing pipelines; you are laying the groundwork for product decisions, machine learning, and analytics. The lines between AI operations, software engineering, and data engineering are blurring quickly.

Focus on impact rather than trends. Furthermore, it is important to remember that the best data engineers are more than just technical experts; they are also collaborative problem solvers and systems thinkers.

TL;DR for New Data Engineers (2025)

- Embrace AI tools (LLMs + MCP) as part of your dev toolkit.

- SQL and Python are still core skills; don't skip them.

- Build real-world projects, not just portfolios.

- Get hands-on with at least one cloud platform.

- Keep learning, iterating, and sharing publicly.
