If you are learning data engineering or preparing for a system design interview, you will inevitably face a difficult question: “What is the difference between at-least-once and exactly-once processing?”

It sounds like academic jargon, but it is one of the most practical problems in modern computing. Whether you are using Apache Flink, Kafka, or AWS Lambda, choosing the wrong guarantee can lead to lost data or duplicated financial transactions.

In this guide, we will break down the three types of data processing guarantees using a simple, real-world analogy: online shopping.

What are data processing guarantees?

In distributed systems, moving data from Point A (Source) to Point B (Destination) is messy. Servers crash, networks fail, and messages get lost. A processing guarantee is the rule set a system uses to handle these failures.

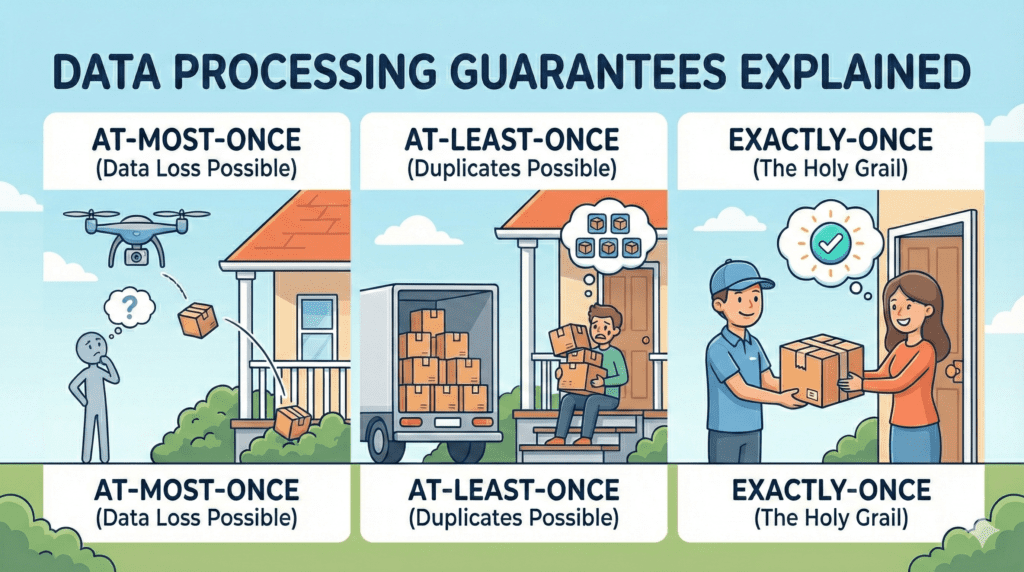

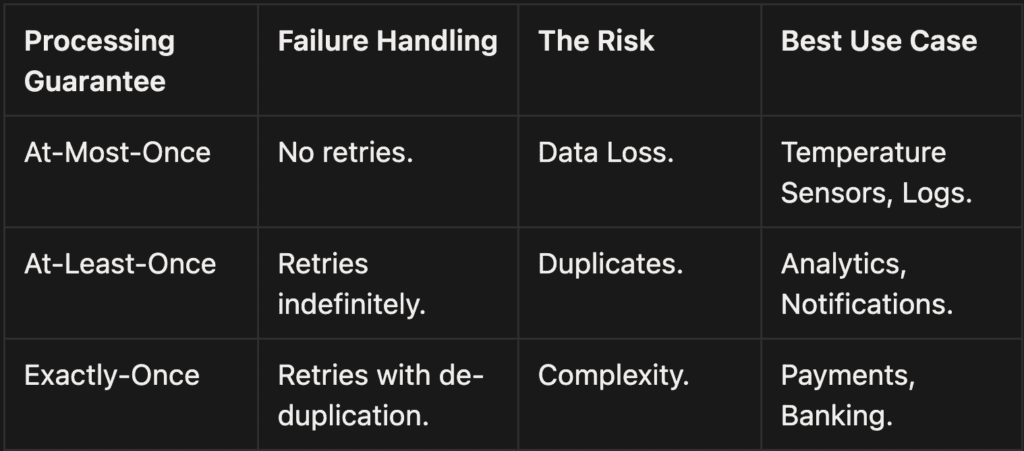

There are three industry standards:

- At-Most-Once: Data might be lost, but never duplicated.

- At-Least-Once: Data is never lost but might be duplicated.

- Exactly-Once: Data is processed once and only once (the gold standard).

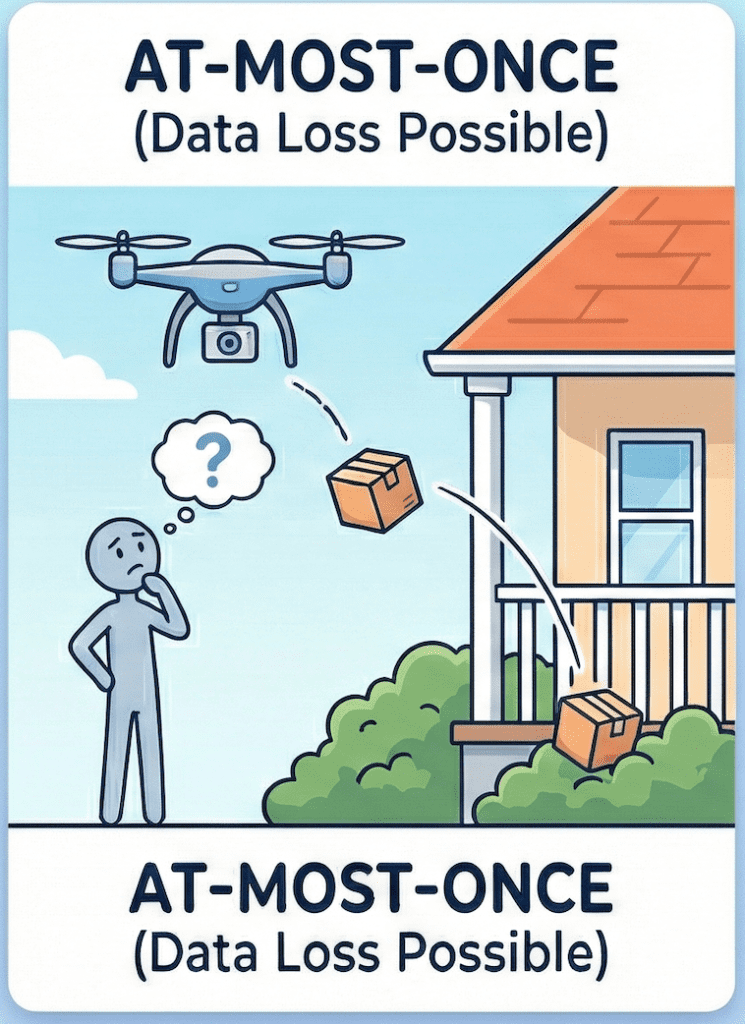

1. At-Most-Once Delivery (The "Fire and Forget" Approach)

Imagine you are buying a laptop online. You click the “Buy Now” button, but your Wi-Fi cuts out at that exact second.

- The Scenario: Your browser sent the request, but you don’t know if the store received it.

- The Rule: You shrug your shoulders and close the tab. You do not click the button again.

- The Outcome: In the best case, the store got the order and you get your laptop. In the worst case, the store never heard you and you get nothing.

Technical Summary

In At-Most-Once processing, the system sends the data packet and doesn’t wait for an acknowledgment. It prioritizes speed over accuracy.

- Pros: Lowest latency, highest throughput.

- Cons: Data loss is possible during failures.

- Use Case: IoT Sensor Data. If you are measuring room temperature every second and miss one reading (e.g., at 12:01:05), it doesn’t matter because the 12:01:06 reading is coming immediately.

How is it Technically Accomplished?

This is the simplest method to implement. The producer (your application) sends a message to the messaging system (like Kafka) in a “fire-and-forget” manner.

The producer uses an asynchronous send operation and does not wait for an acknowledgment (ACK) from the broker to confirm receipt. Once the message leaves the producer, its job is done. If the network fails while the message is in transit, the message is simply lost forever because the producer has already moved on to the next task.

Key Mechanism: Asynchronous send with no acknowledgment.
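To make the fire-and-forget behavior concrete, here is a minimal Python sketch. It does not use a real Kafka client: `LossyChannel` and `fire_and_forget` are hypothetical names invented for this illustration, and the "network" is simulated by dropping messages at chosen positions.

```python
from typing import List, Set


class LossyChannel:
    """Hypothetical network link that silently drops messages at given send positions."""

    def __init__(self, drop_positions: Set[int]) -> None:
        self.drop_positions = drop_positions
        self.sent = 0                     # how many sends the producer attempted
        self.delivered: List[str] = []    # what actually reached the other side

    def send(self, message: str) -> None:
        position = self.sent
        self.sent += 1
        if position in self.drop_positions:
            return  # lost in transit; the producer is never told
        self.delivered.append(message)


def fire_and_forget(channel: LossyChannel, messages: List[str]) -> None:
    """At-most-once: send each message once, never wait for an ACK, never retry."""
    for message in messages:
        channel.send(message)


readings = ["12:01:04 21.5C", "12:01:05 21.6C", "12:01:06 21.6C"]
channel = LossyChannel(drop_positions={1})  # assume the second send is lost
fire_and_forget(channel, readings)
print(channel.delivered)  # ['12:01:04 21.5C', '12:01:06 21.6C']
```

The 12:01:05 reading is gone and nothing in the producer notices, which is acceptable only when the next reading makes the lost one irrelevant.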

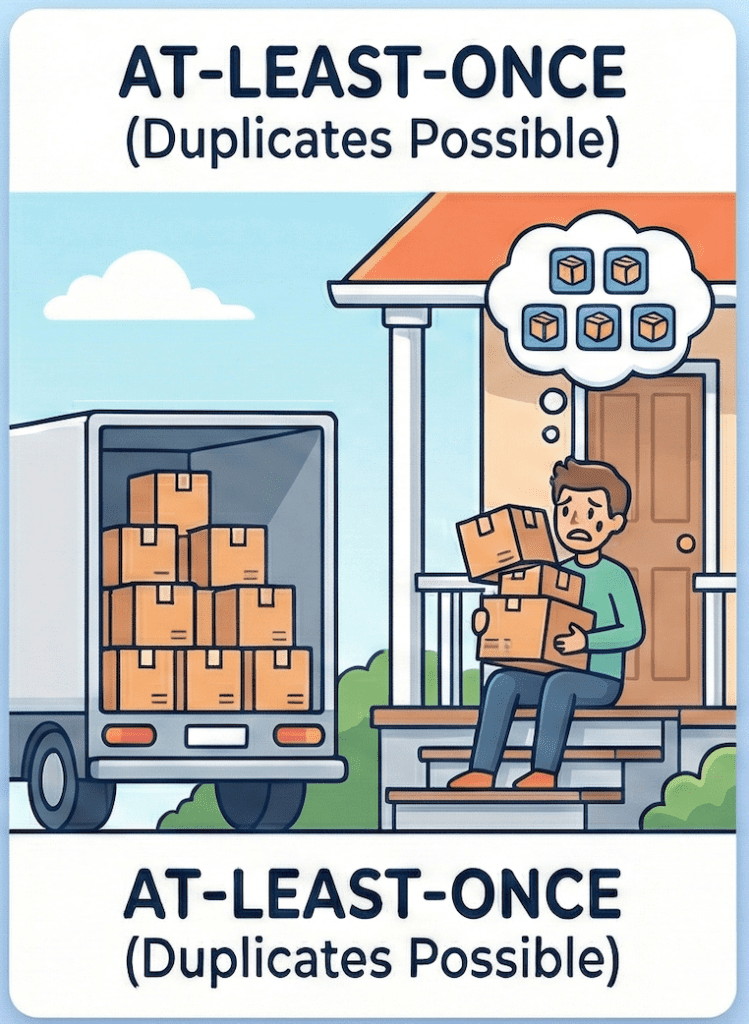

2. At-Least-Once Delivery (The "Guaranteed Delivery" Approach)

Let’s go back to the laptop purchase. You click “Buy Now,” but the screen freezes. You don’t get a confirmation.

- The Scenario: You are terrified the order didn’t go through.

- The Rule: You start furiously clicking the “Buy Now” button again. And again. You do this until you finally see a “Thank You” screen.

- The Outcome: The store actually received your first click, but their reply got stuck. They also received your 5 panic clicks. Three days later, 6 laptops show up at your door.

Technical Summary

In At-Least-Once processing, the system retries sending data until it receives a confirmation. This guarantees no data is lost, but it often results in duplicate data.

- Pros: Zero data loss.

- Cons: You must handle duplicates downstream, for example with idempotent writes or de-duplication.

- Use Case: Mobile Push Notifications or Ad Tracking. It is better to accidentally show a user a notification twice than for them to miss an urgent alert entirely.

How is it Technically Accomplished?

This relies on a system of acknowledgments (ACKs) and retries.

- The producer sends a message and starts an internal timer.

- It keeps a copy of that message in a local buffer.

- It waits for the broker to send back an ACK saying, “I got it and wrote it to disk.”

- If the timer expires before the ACK arrives (perhaps the network failed, or the ACK itself was lost), the producer assumes failure and retries sending the buffered message.

- Because the failure could have been a lost ACK, the broker might receive the same message two or three times. It writes all of them down, leading to duplicates.

Key Mechanism: Retry-on-timeout based on missing ACKs.
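The retry loop above can be sketched in a few lines of Python. This is a simulation under stated assumptions, not a real client: `Broker` and `send_at_least_once` are hypothetical names, and a lost ACK is modeled by `receive` returning `False`, which stands in for the producer's timer expiring.

```python
from typing import List, Set


class Broker:
    """Hypothetical broker: stores every message it receives, but some ACKs get lost."""

    def __init__(self, lost_ack_attempts: Set[int]) -> None:
        self.lost_ack_attempts = lost_ack_attempts  # attempt numbers whose ACK is dropped
        self.attempts = 0
        self.log: List[str] = []

    def receive(self, message: str) -> bool:
        attempt = self.attempts
        self.attempts += 1
        self.log.append(message)  # the write happens before the reply is sent
        # False means the ACK never reached the producer (its timer would expire).
        return attempt not in self.lost_ack_attempts


def send_at_least_once(broker: Broker, message: str, max_retries: int = 5) -> int:
    """Resend the buffered message until an ACK arrives; return the number of attempts."""
    for attempt in range(1, max_retries + 2):  # one initial send plus max_retries retries
        if broker.receive(message):
            return attempt
    raise TimeoutError("no ACK received after all retries")


broker = Broker(lost_ack_attempts={0, 1})  # assume the first two ACKs are lost
attempts = send_at_least_once(broker, "order-555")
print(attempts)     # 3
print(broker.log)   # ['order-555', 'order-555', 'order-555']
```

The message was never lost, but the broker's log now holds three copies of it. That is the trade-off at-least-once makes.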

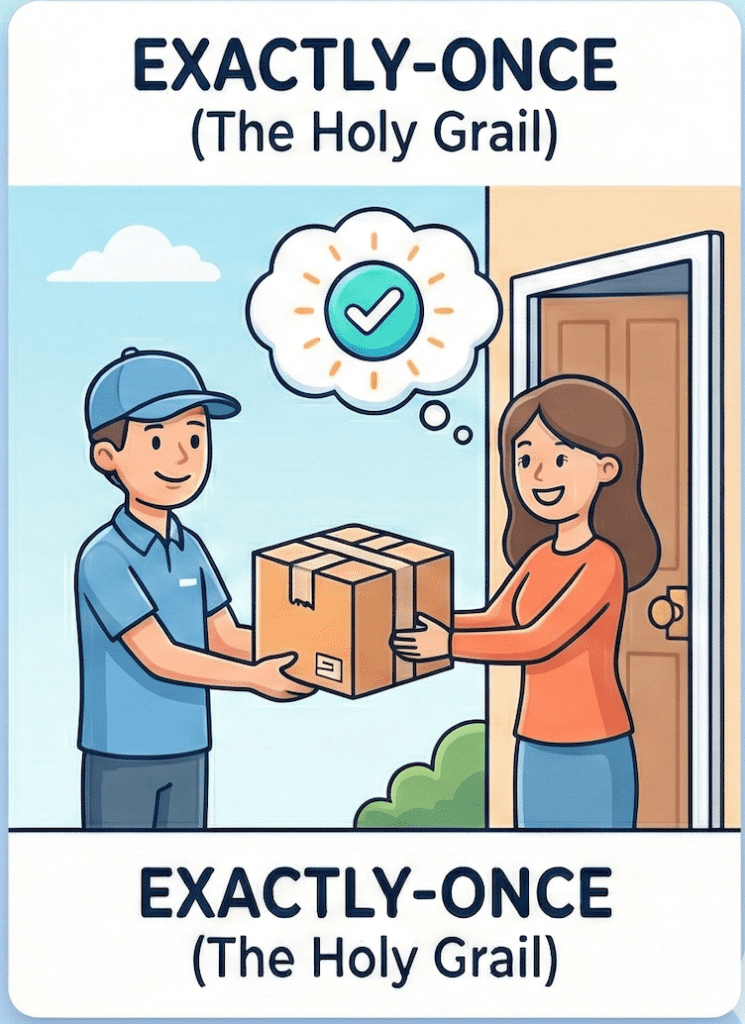

3. Exactly-Once Delivery (The Holy Grail)

This is the perfection engineers strive for. You click “Buy Now,” the screen freezes, and you panic-click the button 10 more times.

- The Scenario: The store receives 11 total clicks from you.

- The Rule: The store’s system is smart. It checks the unique Order ID (state). It sees that it already has a record for “Order #555.”

- The Outcome: The store processes the first click. It recognizes the next 10 clicks as duplicates and discards them — but still sends you a “Success” message so you stop clicking. You get one laptop and are charged once.
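The store's behavior in this analogy can be sketched as a small idempotent handler. The `Store` class and its `buy` method are hypothetical names for illustration, and the sketch assumes every retry carries the same Order ID.

```python
from typing import Dict


class Store:
    """Hypothetical store that de-duplicates purchases by Order ID."""

    def __init__(self) -> None:
        self.orders: Dict[str, str] = {}  # order_id -> item (the remembered state)
        self.charges = 0                  # how many times the customer was charged

    def buy(self, order_id: str, item: str) -> str:
        if order_id in self.orders:
            # Duplicate click: change nothing, but still confirm so the client stops retrying.
            return "Success"
        self.orders[order_id] = item
        self.charges += 1
        return "Success"


store = Store()
replies = [store.buy("555", "laptop") for _ in range(11)]  # 1 click + 10 panic clicks
print(store.charges)       # 1
print(len(store.orders))   # 1
print(set(replies))        # {'Success'}
```

Eleven requests arrive, but only the first one changes anything. The other ten are acknowledged and ignored.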

Technical Summary

Exactly-Once processing ensures that even if machines fail and retry, the final state is updated only once. Technologies like Apache Flink are famous for solving this difficult problem using checkpoints and state management.

- Pros: No lost results and no duplicated results, even after failures and retries.

- Cons: Higher complexity and slightly higher latency.

- Use Case: Financial Transactions, Inventory Management, and Billing. You cannot deposit a check twice, and you cannot lose it.

How is it Technically Accomplished?

Achieving end-to-end exactly-once is complex and requires coordination across the entire pipeline: the source, the processing engine, and the sink (destination).

- Replayable Source: The source must be able to replay old data. For example, Kafka allows consumers to “rewind” to a previous offset and re-read messages if a crash occurs.

- Stateful Processing & Checkpoints: The processing engine (like Apache Flink) needs to remember what it has already done. It does this by periodically taking a checkpoint — a consistent snapshot of its entire state (e.g., “I have processed up to offset 100 in Kafka, and the current counts are X and Y”). This snapshot is saved to durable storage like S3. If the job crashes, it recovers by loading the last successful checkpoint and replaying the data stream from that exact point.

- Idempotent or Transactional Sink: The destination must handle potential duplicate writes that happen during recovery.

- Idempotency: The simplest way. If you write (OrderID: 555, Status: Paid) to a database five times, the result is the same as writing it once. Key-value stores are great for this.

- Two-Phase Commit (Transactional): For systems that aren’t naturally idempotent, the processor and sink work together in a transaction. Data is first “pre-committed.” Only when the processor completes a checkpoint successfully does it tell the sink to “commit” the data, making it visible.
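As a rough illustration of the pre-commit and commit steps, here is a simplified Python sketch. `TransactionalSink` and `process_batch` are hypothetical names, everything lives in memory, and the success or failure of the checkpoint is passed in as a flag. A real two-phase commit also has to make the pre-committed data durable and survive a crash of the coordinator itself, which this sketch does not attempt.

```python
from typing import List


class TransactionalSink:
    """Hypothetical sink: pre-committed records stay invisible until commit()."""

    def __init__(self) -> None:
        self.pending: List[str] = []   # phase 1: written but not visible to readers
        self.visible: List[str] = []   # phase 2: committed and visible

    def pre_commit(self, record: str) -> None:
        self.pending.append(record)

    def commit(self) -> None:
        self.visible.extend(self.pending)
        self.pending.clear()

    def abort(self) -> None:
        self.pending.clear()  # discard everything from the failed attempt


def process_batch(sink: TransactionalSink, records: List[str], checkpoint_succeeds: bool) -> None:
    """Pre-commit every record, then commit only if the checkpoint completed."""
    for record in records:
        sink.pre_commit(record)
    if checkpoint_succeeds:
        sink.commit()
    else:
        sink.abort()


sink = TransactionalSink()
batch = ["order-555 paid", "order-556 paid"]
process_batch(sink, batch, checkpoint_succeeds=False)  # failure: nothing becomes visible
process_batch(sink, batch, checkpoint_succeeds=True)   # the replayed batch commits once
print(sink.visible)  # ['order-555 paid', 'order-556 paid']
```

Because the failed attempt was aborted before anything became visible, replaying the same batch does not produce duplicates.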

Key Mechanism: Coordination using checkpoints, replayable sources, and idempotent/transactional sinks.
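Putting the three pieces together, the following Python sketch simulates a job that crashes mid-stream and recovers. It is a toy model, not Flink's API: the `Job` class is hypothetical, a list stands in for the replayable source, a dictionary keyed by Order ID stands in for an idempotent sink, and `self.checkpoint` stands in for a snapshot saved to durable storage.

```python
from typing import Dict, List, Optional, Tuple


class Job:
    """Hypothetical streaming job: replayable source, checkpointed state, idempotent sink."""

    def __init__(self, source: List[Tuple[str, int]], sink: Dict[str, int]) -> None:
        self.source = source   # replayable: any offset can be re-read
        self.sink = sink       # idempotent: writes are upserts keyed by order ID
        self.checkpoint = {"offset": 0, "total": 0}  # stands in for durable storage

    def run(self, checkpoint_every: int = 2, crash_at: Optional[int] = None) -> int:
        # Recovery: always start from the last successful checkpoint.
        offset = self.checkpoint["offset"]
        total = self.checkpoint["total"]
        while offset < len(self.source):
            if crash_at is not None and offset == crash_at:
                raise RuntimeError("simulated crash")  # in-memory offset and total are lost
            order_id, amount = self.source[offset]
            self.sink[order_id] = amount  # rewriting the same key on replay is harmless
            total += amount
            offset += 1
            if offset % checkpoint_every == 0:
                # Snapshot the source position and the state together.
                self.checkpoint = {"offset": offset, "total": total}
        self.checkpoint = {"offset": offset, "total": total}
        return total


events = [("o1", 10), ("o2", 20), ("o3", 30), ("o4", 40)]
sink: Dict[str, int] = {}
job = Job(events, sink)

try:
    job.run(crash_at=3)  # crashes after writing o3 but before the next checkpoint
except RuntimeError:
    pass

print(job.checkpoint)  # {'offset': 2, 'total': 30}
print(job.run())       # 100
print(sink)            # {'o1': 10, 'o2': 20, 'o3': 30, 'o4': 40}
```

Event `o3` is processed twice, once before the crash and once during replay. The final total and the sink contents are still the same as if every event had been processed exactly once, because the state was rolled back to the checkpoint and the sink write is an upsert.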

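Summary Comparison Table

| Guarantee | Data loss | Duplicates | Key mechanism | Typical use case |
| --- | --- | --- | --- | --- |
| At-Most-Once | Possible | No | Asynchronous send with no acknowledgment | IoT sensor data |
| At-Least-Once | No | Possible | Retry-on-timeout based on missing ACKs | Mobile push notifications, ad tracking |
| Exactly-Once | No | No | Checkpoints, replayable sources, and idempotent/transactional sinks | Financial transactions, inventory management, billing |
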
Final Thoughts

When designing a data pipeline, don’t just ask “How do I move this data?” Ask yourself, “What is the cost of an error?”

- If losing a few events is acceptable, choose At-Most-Once.

- If you can tolerate duplicates (or de-duplicate later), choose At-Least-Once.

- If accuracy is critical to the business (money is involved), you need Exactly-Once.
