The data engineering toolbox has not changed much for a long time. Apache Spark and Apache Flink dominate batch and stream processing, and few new frameworks have generated real excitement.

Given DeepSeek's impressive output, data engineers may also be curious about the data engineering stack behind it. Looking into it, I found that DeepSeek has open-sourced a lightweight project called SmallPond.

What is DeepSeek SmallPond?

A lightweight data processing framework built on DuckDB and 3FS. — From smallpond repository

DeepSeek SmallPond is a lightweight, open-source data processing framework rather than a hosted platform. It targets data processing and analysis workloads, such as those that feed AI and machine learning projects.

SmallPond leverages DuckDB, an in-process SQL OLAP database management system. Because DuckDB is optimized for OLAP queries, it fits well as the compute layer for analytical data pipelines.

To scale beyond a single machine, SmallPond uses Ray, which lets the same workload move from a laptop to a large cluster.

Why choose SmallPond?

SmallPond targets teams that do not need the full capabilities of a distributed computing framework such as Spark and want to use their resources efficiently. It is particularly well suited to small and medium-sized workloads.

SmallPond can also serve as an effective prototyping framework for AI startups or companies with medium data volumes, reducing the time needed to set up the necessary infrastructure.

SmallPond Example

Data Source: Top Spotify Songs in 73 Countries — CC0: Public Domain

The SmallPond repository has good getting-started instructions.

Let’s write an analytics query to get the average popularity for each artist and rank them in descending order.

To shuffle data and distribute it across nodes with Ray, SmallPond currently requires users to specify how the data should be partitioned.

Hashing on a column is a common choice for aggregations, because all rows with the same key end up in the same partition. There are a few ways to repartition:

df = df.repartition(5) # repartition by files

df = df.repartition(5, by_row=True) # repartition by rows

df = df.repartition(5, hash_by="artists") # repartition by hash of column
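
To see why hashing by the grouping column matters, here is a minimal pure-Python sketch of hash partitioning. It does not use SmallPond: `hash_partition` and the sample rows are hypothetical, and the hash function SmallPond uses internally is likely different. The only point is that every row for a given artist lands in the same partition, so each partition can be aggregated on its own.

```python
import zlib
from collections import defaultdict


def hash_partition(rows, key, num_partitions):
    """Assign each row to a partition based on a stable hash of row[key]."""
    partitions = [[] for _ in range(num_partitions)]
    for row in rows:
        # crc32 is stable across runs, unlike Python's built-in hash() for str
        index = zlib.crc32(row[key].encode("utf-8")) % num_partitions
        partitions[index].append(row)
    return partitions


# Hypothetical sample rows, standing in for the Spotify dataset
rows = [
    {"artists": "A", "popularity": 80},
    {"artists": "B", "popularity": 60},
    {"artists": "A", "popularity": 90},
    {"artists": "C", "popularity": 70},
    {"artists": "B", "popularity": 100},
]

partitions = hash_partition(rows, key="artists", num_partitions=5)

# Record which partition(s) each artist ended up in
artist_to_partitions = defaultdict(set)
for index, part in enumerate(partitions):
    for row in part:
        artist_to_partitions[row["artists"]].add(index)

for artist, indexes in sorted(artist_to_partitions.items()):
    print(artist, sorted(indexes))
```

Each artist maps to exactly one partition index. Some partitions may stay empty when there are fewer distinct keys than partitions.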

Then we can call partial_sql to run the query on each of the 5 partitions and write the results to an output location.

import smallpond

# Initialize session

sp = smallpond.init()

# Load data

df = sp.read_parquet("data/spotify_songs.parquet")

# Process data

df = df.repartition(5, hash_by="artists")

# Use a plain string, not an f-string: an f-string would render {0} as the literal 0

df = sp.partial_sql("""SELECT artists, avg(popularity) avg_popularity

FROM {0} GROUP BY artists

ORDER BY avg_popularity DESC""", df)

# Save results

df.write_parquet("output/")

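# Show results (sort again here, since the ORDER BY above applies within each partition only)

print(df.to_pandas().sort_values("avg_popularity", ascending=False))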

The logic is comparable to what you would typically write in a Spark job.
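
One detail worth keeping in mind with partitioned execution: a query that runs once per partition can only sort within that partition. The pure-Python sketch below illustrates this under the assumption that the data is already hash-partitioned by artist. It is not SmallPond code, and `aggregate_partition` and the sample partitions are hypothetical.

```python
from collections import defaultdict


def aggregate_partition(rows):
    """Per-partition step: average popularity per artist, sorted descending."""
    totals = defaultdict(lambda: [0, 0])  # artist -> [sum, count]
    for row in rows:
        totals[row["artists"]][0] += row["popularity"]
        totals[row["artists"]][1] += 1
    result = [(artist, s / n) for artist, (s, n) in totals.items()]
    return sorted(result, key=lambda item: item[1], reverse=True)


# Hypothetical partitions, already hash-partitioned by artist
partitions = [
    [{"artists": "A", "popularity": 80}, {"artists": "A", "popularity": 90}],
    [
        {"artists": "B", "popularity": 60},
        {"artists": "B", "popularity": 100},
        {"artists": "C", "popularity": 95},
    ],
]

partials = [aggregate_partition(p) for p in partitions]

# Concatenating per-partition results keeps each partition's order,
# but the combined list is not globally ranked
concatenated = [item for part in partials for item in part]

# A final sort over the combined result produces the global ranking
ranked = sorted(concatenated, key=lambda item: item[1], reverse=True)

print(concatenated)
print(ranked)
```

The averages themselves are correct per artist because no artist is split across partitions. Only the ranking needs the final sort.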

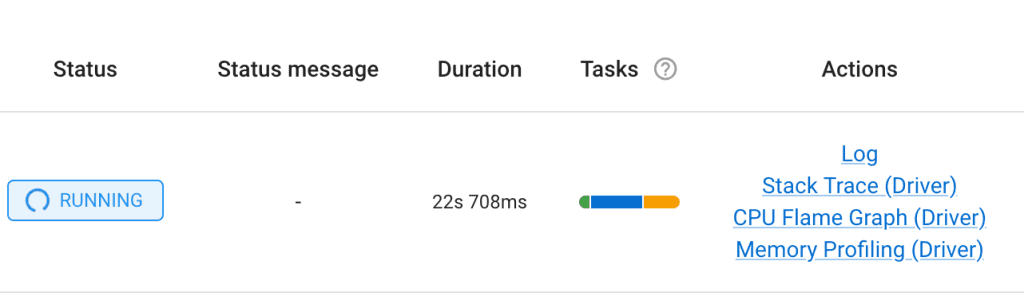

You can also monitor the job and the progress of its DAG by examining CPU flame graphs, logs, and other diagnostics. Once you are comfortable with local testing, you can scale the application out using a Ray cluster.

Final Thoughts

DeepSeek SmallPond is a lightweight data processing project. It is still in its early stages, but its potential is already visible. By combining scalability with ease of use, SmallPond can be a useful additional tool for a data engineering or AI project. Now is a good time to try it and see what it can offer.
