Apache Airflow 3.0 recently launched with numerous new features. While many of them are exciting to use, Airflow 3.0 also introduces a new way of writing Directed Acyclic Graphs (DAGs). Some of these changes are breaking, so a DAG written for Apache Airflow 2.x may no longer work as expected in 3.0.

I knew there were a few breaking changes, and I used to look them up online to make sure I adopted them. As LLMs and MCP evolve, I believe this is a wonderful opportunity to show how they can update DAGs for Apache Airflow 3.0 automatically, without any hassle.

Apache Airflow 3.0 Setup

The easiest way to set up Apache Airflow is to use the official Airflow Docker image and Docker Compose to spin up the instance.

You can find more details about the setup in my previous post:

Unboxing Apache Airflow 3.0: What’s New, What’s Gone, and Why It Matters


Directly Asking an LLM to Write an Airflow DAG

I used Cline with Gemini 2.5 Pro to write the Airflow DAG for me.

Let’s start with a simple prompt:

Write a new airflow dag that uses PythonOperator to print out current execution date

Cline created a new file called print_execution_date_dag.py:

```python
from __future__ import annotations

import pendulum

from airflow.models.dag import DAG
from airflow.operators.python import PythonOperator

# 1. Define the Python function
def print_execution_date_fn(**kwargs):
    execution_date = kwargs['ds']  # 'ds' is the execution date as YYYY-MM-DD
    print(f"Current execution date is: {execution_date}")

# 2. Define the DAG
with DAG(
    dag_id='print_execution_date_dag',
    start_date=pendulum.datetime(2023, 10, 26, tz="UTC"),  # Adjust as needed
    schedule_interval='@daily',  # Adjust as needed
    catchup=False,
    tags=['example'],
) as dag:
    # 3. Create the PythonOperator
    print_date_task = PythonOperator(
        task_id='print_date_task',
        python_callable=print_execution_date_fn,
    )
```

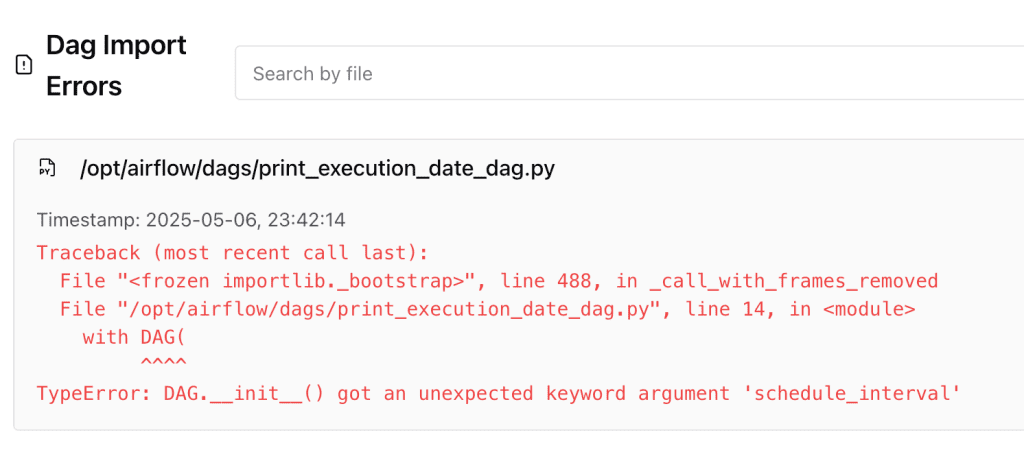

At first glance, there isn’t anything wrong with the code above, and it would run on Airflow 2.x. However, the DAG isn’t compatible with Airflow 3.0. When you put this file in the DAG folder and let Airflow 3.0 parse it, you will see a DAG import error like the one in the screenshot.

The reason is that Airflow 3.0 removed the schedule_interval argument (deprecated since Airflow 2.4) in favor of schedule. This is a breaking change when you migrate code from Airflow 2.x to 3.0. Gemini 2.5 Pro’s training data predates the Airflow 3.0 release, so the model doesn’t know about the new requirement and falls back to the older syntax.
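You can catch this kind of breaking change before Airflow even parses the file with a small static check. The sketch below is a minimal example that uses only Python’s standard ast module. REMOVED_DAG_KWARGS and find_removed_dag_kwargs are hypothetical names for illustration. The table covers just the two DAG arguments I know were removed in favor of schedule (schedule_interval and timetable), not the full Airflow 3.0 migration list. Dedicated linters such as Ruff’s Airflow rules cover far more cases.

```python
import ast

# DAG(...) keyword arguments removed in Airflow 3.0, mapped to their replacement.
# Both were deprecated in Airflow 2.4 in favor of `schedule`.
# Hypothetical, deliberately incomplete table for illustration.
REMOVED_DAG_KWARGS = {
    "schedule_interval": "schedule",
    "timetable": "schedule",
}


def find_removed_dag_kwargs(source: str) -> list[tuple[int, str, str]]:
    """Return (line, old_kwarg, replacement) for every DAG(...) call using a removed kwarg."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        # Match both `DAG(...)` and attribute-style calls such as `models.DAG(...)`.
        func = node.func
        if isinstance(func, ast.Name):
            name = func.id
        elif isinstance(func, ast.Attribute):
            name = func.attr
        else:
            name = None
        if name != "DAG":
            continue
        for kw in node.keywords:
            # kw.arg is None for **kwargs unpacking, which cannot be inspected statically.
            if kw.arg in REMOVED_DAG_KWARGS:
                findings.append((kw.value.lineno, kw.arg, REMOVED_DAG_KWARGS[kw.arg]))
    return sorted(findings)


dag_source = '''
from airflow.models.dag import DAG

with DAG(dag_id="print_execution_date_dag", schedule_interval="@daily") as dag:
    pass
'''

for line, old, new in find_removed_dag_kwargs(dag_source):
    print(f"line {line}: replace `{old}` with `{new}`")
```

The check only reads source text and never imports Airflow, so it runs the same way against Airflow 2.x and 3.0 environments.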

LLM + MCPs in Action for Airflow 3.0 DAG Update

Ideally, Airflow 3.0 material would be included in the training data of the next Gemini release. Until then, the question is: how can we provide the updated Airflow 3.0 documentation to the LLM so it can use the new syntax?

The answer is to provide the Airflow 3.0 documentation to the LLM through MCP, so it becomes aware of the necessary changes.

MCP (Model Context Protocol) is an open standard for connecting AI applications to data sources and tools, similar to an API layer designed specifically for LLMs. Without it, each team builds its own integrations with DuckDB, Snowflake, or Spark. With it, tool providers expose a shared interface that any MCP-compatible LLM application can connect to.
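To make the "shared interface" concrete: MCP messages are JSON-RPC 2.0. With the stdio transport, the client launches the server as a subprocess and exchanges newline-delimited JSON messages over stdin and stdout. The minimal sketch below builds the tools/call request a client such as Cline sends when it asks a server to run a tool. The tool name navigate and its url argument are hypothetical placeholders, not the Browserbase server’s actual tool schema. A real client also performs the initialize handshake first, which this sketch omits.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as one newline-delimited JSON-RPC message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    # The stdio transport frames messages by newline, so the JSON must not contain
    # raw newlines; json.dumps without `indent` escapes them and emits a single line.
    return json.dumps(message) + "\n"


# Hypothetical tool name and arguments, for illustration only.
line = make_tool_call(
    1,
    "navigate",
    {"url": "https://airflow.apache.org/docs/apache-airflow/stable/tutorial/fundamentals.html"},
)
print(line, end="")
```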

We will use Cline as the MCP client: it talks to both the LLM and the MCP servers, and it writes the code. At the moment, there is no MCP server dedicated to the Apache Airflow documentation. Ideally, such a server would give LLMs structured access to API changes, function signatures, and migration guides straight from the Airflow project.

However, we can use Browserbase, a web browser for AI agents and applications, to fetch the Airflow 3.0 documentation through its MCP server.
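Browserbase drives a real browser, so it can also handle JavaScript-rendered pages. For a mostly static documentation page, you can sketch the core idea of "fetch the page and keep only the readable text" with Python’s standard library alone. This is a simplified stand-in, not how Browserbase works internally. TextExtractor and html_to_text are hypothetical names. The sketch parses an inline HTML string instead of fetching a URL, so it runs offline.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text from an HTML document, skipping <script> and <style> contents."""

    SKIP_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep text only when we are not inside a skipped tag.
        if self._skip_depth == 0:
            self.chunks.append(data)


def html_to_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    # Join fragments with spaces, then collapse all runs of whitespace.
    return " ".join(" ".join(parser.chunks).split())


sample = """
<html><head><style>body { color: red; }</style></head>
<body><h1>Fundamental Concepts</h1>
<script>trackPageView();</script>
<p>Use the <code>schedule</code> argument.</p></body></html>
"""
print(html_to_text(sample))
```

Stripping markup before the text reaches the model keeps the context small, which matters when a whole documentation page is passed along.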

First, we need to add the Browserbase MCP server to Cline’s MCP settings file, with the proper API key and project ID:

```json
{
  "mcpServers": {
    "github.com/browserbase/mcp-server-browserbase": {
      "autoApprove": [],
      "disabled": false,
      "timeout": 60,
      "command": "node",
      "args": [
        "~/Cline/MCP/browserbase/browserbase/dist/index.js"
      ],
      "env": {
        "BROWSERBASE_API_KEY": "",
        "BROWSERBASE_PROJECT_ID": ""
      },
      "transportType": "stdio"
    }
  }
}
```
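The two env values are blank in the configuration above. You must fill them in with your own Browserbase credentials before the server can work. A blank key is an easy mistake to miss, so here is a small, hypothetical pre-flight check that reads the settings JSON and reports any blank environment variables for a given server. It assumes a top-level mcpServers object, as in Cline’s MCP settings file. find_blank_env_vars is an illustrative name, not part of Cline.

```python
import json

SETTINGS_JSON = """
{
  "mcpServers": {
    "github.com/browserbase/mcp-server-browserbase": {
      "command": "node",
      "args": ["~/Cline/MCP/browserbase/browserbase/dist/index.js"],
      "env": {"BROWSERBASE_API_KEY": "", "BROWSERBASE_PROJECT_ID": ""},
      "transportType": "stdio"
    }
  }
}
"""


def find_blank_env_vars(settings: dict, server_name: str) -> list[str]:
    """Return the names of env vars that have no value for one MCP server."""
    server = settings.get("mcpServers", {}).get(server_name)
    if server is None:
        raise KeyError(f"MCP server {server_name!r} is not configured")
    env = server.get("env", {})
    return sorted(name for name, value in env.items() if not str(value).strip())


settings = json.loads(SETTINGS_JSON)
blank = find_blank_env_vars(settings, "github.com/browserbase/mcp-server-browserbase")
if blank:
    print("Fill in before enabling the server:", ", ".join(blank))
```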

Once the Browserbase MCP server is enabled, we can update our prompt:

Write a new airflow dag that uses PythonOperator to print out current execution date. Please note there is a new version of Airflow 3.0, please search latest documentation of Airflow 3.0 in https://airflow.apache.org/docs/apache-airflow/stable/tutorial/fundamentals.html and update the code compatible with Airflow 3.0

Cline responds with the following plan, which says it will look up the latest documentation online:

Okay, I understand. You want a new Airflow DAG that uses a `PythonOperator` to print the current execution date, and you want to ensure the code is compatible with Airflow 3.0, referencing the documentation at `https://airflow.apache.org/docs/apache-airflow/stable/tutorial/fundamentals.html`.

I will use the browser tools to access the documentation and confirm the best practices for Airflow 3.0 for this task.

Here's the refined plan:

1. __Access Documentation__: I'll use the browser tools to navigate to the provided URL and extract the text content to understand the Airflow 3.0 fundamentals, focusing on DAG definition and `PythonOperator` usage.

2. __Formulate DAG Code__: Based on the documentation and standard Airflow practices, I will formulate the Python code for the DAG. This will involve:

- Defining a Python function to print the execution date (available via `kwargs['ds']`).

- Defining the DAG object with appropriate parameters (`dag_id`, `start_date`, `schedule_interval`, `catchup`, `tags`).

- Instantiating a `PythonOperator` to execute the defined function.

3. __Propose Code and Filename__: I will present the proposed DAG code to you. We also need to decide on a filename. Since `dags/print_execution_date_dag.py` already exists, would you like to:

- Overwrite `dags/print_execution_date_dag.py`?

- Create a new file, for example, `dags/airflow_3_print_date_dag.py`?

4. __Implementation__: Once you approve the plan, the code, and the filename, and switch me to ACT mode, I will write the file.

Let's start by accessing the documentation. I'll first create a browser session.

Now let’s switch Cline from Plan mode to Act mode.

First, Browserbase navigates to the Airflow 3.0 fundamentals page and extracts its content. Cline then feeds that content into the LLM as context.
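Conceptually, this step is retrieval-augmented prompting: the text returned by the browser tool goes into the model’s context next to the original request. The sketch below illustrates that idea. It is not Cline’s actual prompt format. build_prompt, the <docs> delimiters, and the character budget are assumptions, and real tools usually budget in tokens rather than characters.

```python
def build_prompt(task: str, doc_url: str, doc_text: str, max_doc_chars: int = 4000) -> str:
    """Combine the user's task with fetched documentation, truncating the docs to a budget."""
    if len(doc_text) > max_doc_chars:
        doc_text = doc_text[:max_doc_chars] + "\n[... documentation truncated ...]"
    return (
        "You are updating code for Apache Airflow 3.0.\n"
        f"Reference documentation fetched from {doc_url}:\n"
        "<docs>\n"
        f"{doc_text}\n"
        "</docs>\n\n"
        f"Task: {task}"
    )


prompt = build_prompt(
    task="Write a new airflow dag that uses PythonOperator to print out current execution date",
    doc_url="https://airflow.apache.org/docs/apache-airflow/stable/tutorial/fundamentals.html",
    doc_text="Use the schedule argument to define when a DAG runs.",  # placeholder, not the real page text
)
print(prompt)
```

Placing the documentation before the task keeps the request as the last thing the model reads. Truncation guards against pages that exceed the context window.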

Now we can see the updated code. Notice schedule='@daily': this is the key correction the LLM made after consulting the Airflow 3.0 documentation via Browserbase, and it resolves the earlier error.

```python
from __future__ import annotations

import pendulum

from airflow.models.dag import DAG
from airflow.operators.python import PythonOperator

def print_execution_date_callable(**kwargs):
    execution_date_str = kwargs['ds']
    print(f"Current execution date (ds): {execution_date_str}")

with DAG(
    dag_id='airflow_3_print_date_dag',  # Updated dag_id
    start_date=pendulum.datetime(2023, 1, 1, tz="UTC"),
    schedule='@daily',
    catchup=False,
    tags=['airflow3', 'example'],
    doc_md="""### Print Execution Date DAG
This DAG demonstrates the use of the PythonOperator to print the current execution date.
It is written based on Airflow 3.0 fundamentals.
""",
) as dag:
    print_date_task = PythonOperator(
        task_id='print_the_date',
        python_callable=print_execution_date_callable,
    )
```
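The callable only reads ds from its keyword arguments, so you can sanity-check it without a running Airflow instance by passing a hand-built context. In Airflow, ds is the run’s logical date formatted as YYYY-MM-DD. The fake_context dict and the stdout-capture harness below are illustrative assumptions; a real task receives many more context keys.

```python
import contextlib
import io
from datetime import datetime, timezone


def print_execution_date_callable(**kwargs):
    # Same body as the DAG's callable: `ds` is the logical date as YYYY-MM-DD.
    execution_date_str = kwargs['ds']
    print(f"Current execution date (ds): {execution_date_str}")


# Hypothetical stand-in for the context Airflow would pass to the task.
logical_date = datetime(2023, 1, 1, tzinfo=timezone.utc)
fake_context = {"ds": logical_date.strftime("%Y-%m-%d"), "logical_date": logical_date}

# Capture what the callable prints so it can be checked programmatically.
buffer = io.StringIO()
with contextlib.redirect_stdout(buffer):
    print_execution_date_callable(**fake_context)

output = buffer.getvalue()
print(output, end="")
```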

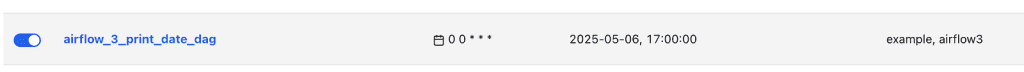

In the Airflow web UI, you can see that the new DAG works in Airflow 3.0. It parses and renders without any errors.

Final Thoughts

We addressed the challenge of moving to Apache Airflow 3.0 and its updated DAG syntax by giving our LLM real-time access to documentation through the Browserbase MCP server.

The initial mistake, caused by the LLM’s outdated training data, was quickly fixed once we asked the LLM to read the latest Airflow 3.0 documentation.

I want to highlight how important it is to give LLMs the right context and tools. With them, an LLM can produce far better results than it can from its training data alone.

About Me

I hope my stories are helpful to you.

For more data engineering posts, you can subscribe to my new articles or become a referred Medium member to get full access to stories on Medium.