Airflow is one of the most prominent Apache projects in the data community. It joined the Apache Incubator in March 2016, and many impressive features have been added in the nine years since. The community is now eagerly awaiting the next major release: Airflow 3.0.

Based on the Astronomer website (https://www.astronomer.io/airflow/3-0/) and the Airflow 3.0 workstream, we can already preview the anticipated new features. In this post, I share my initial thoughts on them.

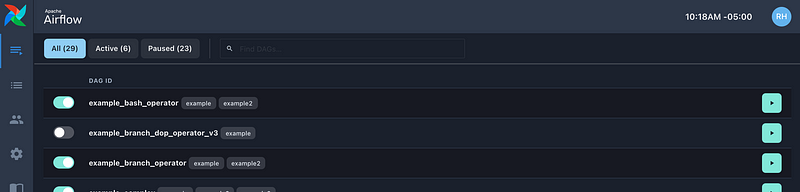

Modern UI

Feature Description: React-based UI with support for embedded plug-ins. Improved navigation, real-time refresh, and dark mode option.

Personal Excitement Level: 9/10

AIP-38 Modern Web Application is the core proposal of the modern UI for Airflow. This is a comprehensive redesign of the existing UI, utilizing the following primary technologies: React, TypeScript, and Chakra UI.

At the time of writing, the page that is supposed to host the proof-of-concept demo UI is down: https://nova.astronomer.io/

A screenshot from AIP-38 previews the look and feel of Apache Airflow 3.0. It definitely looks modern, but I expect it will take some practice to get used to the new UI when moving from Airflow 2 to 3.0. I also hope that the redesign won’t lead to a loss of essential functionality.

DAG Versioning

Feature Description: Airflow users face challenges in understanding and managing DAG history, and this feature aims to address them.

Personal Excitement Level: 8/10

This is a highly anticipated feature in Airflow 3.0, and many teams urgently need it. Today, Airflow always runs the latest code of a DAG, even when a DAG run is already partway through, or when a task within it has to be retried.

This causes several problems. Deploying a DAG while jobs are running can lead to unexpected results or orphaned tasks. It is hard to tell which version of a DAG generated a given piece of data. And the history of runs executed with a previous DAG version is hidden from the UI.

The amount of effort required to implement DAG versioning is remarkable. You can learn more about this change from this talk.
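To make the problem concrete, here is a small toy model in plain Python. It is not Airflow code, and `DagStore`, `run_dag`, and `demo` are hypothetical names invented for this illustration. It contrasts a run that re-reads the latest DAG definition before every task with a run that sticks to the version it started with.

```python
from dataclasses import dataclass, field


@dataclass
class DagStore:
    """Toy stand-in for deployed DAG code: version -> ordered task names."""

    versions: dict[int, list[str]] = field(default_factory=dict)

    def deploy(self, tasks: list[str]) -> int:
        """Store a new version of the DAG and return its version number."""
        version = len(self.versions) + 1
        self.versions[version] = list(tasks)
        return version

    @property
    def latest(self) -> int:
        return max(self.versions)


def run_dag(store, pin_version, after_first_task=None):
    """Run the DAG task by task and record (version, task) for each step.

    With pin_version=False the latest definition is looked up before every
    task; with pin_version=True the version seen at the start is reused.
    """
    start_version = store.latest
    executed = []
    step = 0
    while True:
        version = start_version if pin_version else store.latest
        tasks = store.versions[version]
        if step >= len(tasks):
            break
        executed.append((version, tasks[step]))
        if step == 0 and after_first_task is not None:
            after_first_task()  # simulates a deployment in the middle of the run
        step += 1
    return executed


def demo(pin_version: bool):
    """Deploy version 1, start a run, and deploy version 2 after the first task."""
    store = DagStore()
    store.deploy(["extract", "transform", "load"])
    return run_dag(
        store,
        pin_version,
        after_first_task=lambda: store.deploy(["extract", "validate", "load"]),
    )


mixed = demo(pin_version=False)
pinned = demo(pin_version=True)
print(mixed)
print(pinned)
```

In the unpinned run, `transform` never executes and the run ends up as a mix of two versions, which is the kind of surprise described above. The pinned run finishes entirely on version 1, which is roughly the guarantee I hope DAG versioning will bring.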

More Languages Support for Task Creation

Feature Description: Initially Go, Java, JavaScript, and TypeScript. Support for additional languages is planned in the future. Language sequence may change based on demand. DAGs will still be written in Python.

Personal Excitement Level: 7/10

When I first read about this feature, I thought we would be able to write DAGs in other languages. However, that is not what it means. DAGs are still written in Python.

Does Airflow 3.0 support DAG authoring in additional languages? No, DAGs will still be written in Python. Airflow 3 will be multilingual, so that DAGs generated from a DagFactory or a custom DSL, or a DAG natively written in Python, can invoke tasks written in any language. By extending task support to other languages, Airflow can integrate with application APIs written in Java, JavaScript, or TypeScript.

Currently, Python is the only language in which Airflow tasks can be written. Airflow 3.0 is expected to allow tasks in other languages by exposing a task execution interface with multiple language bindings. For example, we could run tasks that depend on libraries available only in Go.

Extending task support to more languages would make Airflow easier to adopt for people who are proficient in those languages. Many SaaS platforms already let users choose among several languages.
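The general idea of a language-neutral task boundary can be sketched in a few lines. This is only my own illustration and not how Airflow 3.0 implements its task execution interface: the helper `run_external_task` and the JSON-over-stdin/stdout contract are assumptions made up for this example. A Python orchestrator hands a payload to an external program and reads the result back, so the program itself could be compiled from Go or Java.

```python
import json
import subprocess
import sys


def run_external_task(command: list[str], payload: dict) -> dict:
    """Run a task implemented as an external program (hypothetical helper).

    The payload is sent as JSON on stdin and the result is read as JSON
    from stdout, so the program can be written in any language.
    """
    completed = subprocess.run(
        command,
        input=json.dumps(payload),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError on a non-zero exit code
    )
    return json.loads(completed.stdout)


# Stand-in for a compiled Go or Java binary: a tiny inline Python program,
# used here only so the example runs without another toolchain installed.
STAND_IN_TASK = [
    sys.executable,
    "-c",
    "import json, sys; d = json.load(sys.stdin); "
    "print(json.dumps({'total': sum(d['values'])}))",
]

result = run_external_task(STAND_IN_TASK, {"values": [1, 2, 3]})
print(result)
```

Swapping `STAND_IN_TASK` for the path to a real binary is all it would take to call a task written in another language, as long as that binary follows the same input and output contract.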

Event-driven Scheduling

Feature Description: This feature aims to introduce native event-driven capabilities to Airflow, allowing users to create workflows that can be triggered by external events, thus enabling more responsive data pipelines.

Personal Excitement Level: 9/10

One of the fundamental components of Apache Airflow is the scheduler, which is designed for time-based and dependency-driven orchestration of workflows. Today, event-driven workflows can be built with external task sensors, sensors or deferrable operators, the REST API, or datasets.

Event-driven triggers in Airflow 3.0 will let an update to an asset, such as a changed S3 file or a new SQS message, start a job directly, instead of requiring a separate process that continuously monitors upstream datasets. The overall solution becomes much clearer and more succinct:

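    from datetime import datetime

    # Preview syntax: the Airflow names used here (S3FileUpdatedTrigger, Asset,
    # DAG, EmptyOperator, chain) and DAG_ID are left without imports or
    # definitions because their final module paths may differ in the release.
    trigger = S3FileUpdatedTrigger(s3_file="<s3_file_uri>")
    asset = Asset("<s3_file_uri>", watchers=[trigger])

    with DAG(
        dag_id=DAG_ID,
        schedule=asset,  # run when the asset is updated, not on a timetable
        start_date=datetime(2021, 1, 1),
        tags=["example"],
        catchup=False,
    ):
        empty_task = EmptyOperator(task_id="empty_task")

        chain(empty_task)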
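To show why this push model is simpler than polling, here is a toy version in plain Python. Nothing in it is Airflow API: `ToyEventBus`, `schedule_on`, `emit`, and the `s3://bucket/...` URIs are hypothetical names chosen for the illustration. A callback standing in for a DAG is registered against an asset URI and runs only when an event for that URI arrives.

```python
from collections import defaultdict
from typing import Callable


class ToyEventBus:
    """Maps an asset URI to the callbacks (stand-ins for DAGs) scheduled on it."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def schedule_on(self, asset_uri: str, dag: Callable[[dict], None]) -> None:
        """Register a DAG stand-in to run whenever the asset receives an event."""
        self._subscribers[asset_uri].append(dag)

    def emit(self, asset_uri: str, event: dict) -> int:
        """Deliver one external event and return the number of runs started."""
        dags = self._subscribers.get(asset_uri, [])
        for dag in dags:
            dag(event)
        return len(dags)


runs = []  # records which events actually started a run
bus = ToyEventBus()
bus.schedule_on("s3://bucket/data.csv", lambda event: runs.append(event["etag"]))

started = bus.emit("s3://bucket/data.csv", {"etag": "abc"})   # watched asset
ignored = bus.emit("s3://bucket/other.csv", {"etag": "zzz"})  # nobody listens
print(started, ignored, runs)
```

There is no loop that wakes up on a schedule to check whether the file changed. The run starts when the event is delivered, and events for assets nobody watches cost nothing.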

Final Thoughts

The alpha and beta versions of Apache Airflow 3.0 are scheduled for release in January and February 2025, respectively, with the final public version expected on March 31, 2025. I am very excited about this major release, as it represents a significant milestone for the Airflow community. Once the new version is released, I will have more to share. Thanks for reading!
